Why Android Moved to 16KB Pages

In previous lectures, we learned how virtual memory gives each process its own isolated address space. We also learned how page tables translate virtual addresses into physical addresses, and how TLB caches recent translations to make this process fast.

We discussed fragmentation: Internal fragmentation is unused space inside an allocated page; external fragmentation is holes between allocated space.

4KB has long been the standard page size, but Google announced that from Android 15, it will support 16KB page size. Why would an operating system increase page size when that could increase internal fragmentation? Let’s trace the evolution of Android and see what has changed over the years.

Android’s Early Day

窮則變, 變則通 (Innovation happens under huge constraints)

Android is born at the time of 192MB RAM.

Small device, like a microwave controller, doesn’t need an MMU because it only runs a single application. It just uses raw physical addressing. But how do we support multi-tasking under extremely small memory? How to run Pokemon Go and Facebook at the same time???

Memory Poverty

Your current phone probably has 12~16GB of RAM. But, consider one of the very first Android phones in 2008, the HTC Dream (designed in Taiwan!).

It had 192MB of RAM! The challenge was clear: How do you run an entire OS and multiple applications on that? The constraints forced engineers to think about innovative memory management.

On your desktop, you can open dozens of browser tabs without problems. On early smartphones, “switching apps” can mean killing and releasing memory used by the previous app. But, how do you create the illusion of multitasking? When you go back to the previous app, how to quickly go back to the previous state? How did the OS decide which app to sacrifice if memory is almost running out? This required carefully process lifecycle management, low-memory killer mechanisms, and efficient context switching. Applications had to save their state quickly to avoid data loss.

Running Java

Android apps were written in Java (not in C/C++ like most embedded system). The programmer doesn’t need to malloc/free their memory like C++. Java’s runtime does automatic memory management. The challenge was making Java work on phones with small RAM and slow CPU without constant pauses for garbage collection or out-of-memory errors.

The material of this page is written after listening to this podcast: Android’s Unlikely Success. This is an interview with former Google engineer in charge of Android.

Apple’s iPhone doesn’t have this problem because Apple controls both the hardware and software. Before the Pixel phone, Google wasn’t selling hardware. They licensed Android to run on devices from dozens of manufacturers: HTC, Samsung, etc, which makes it difficult to do many optimization specific to certain hardware.

The 4KB Legacy

Historically, most systems, including x86 and Linux, use 4KB pages. This size dates back to the 1980s when physical memory was small and page tables had to fit in limited address space. Modern x86 systems use four-level page tables built around the 4KB unit, though they also support “huge pages” (2 MB or 1 GB) for special cases.

The 4KB page became the standard because it kind of balances page table overhead, TLB coverage, and internal fragmentation for the memory sizes of its time. But that balance point was chosen when main memory was measured in megabytes. Now, your smartphone easily has >12GB of DRAM.

Similar to the problem Google was facing, Intel and AMD cannot do many hardware optimization on x86-64 specific to certain software because they cannot control the OS running on top of it. If x86-64 suddenly switches its page size to 16KB, the vast software on top of it will be broken. Again, Apple doesn’t have this problem.

(Remember the PCID feature in TLB we mentioned in the lecture? This is indeed a hardware-software co-optimization feature because address space or process is a OS-level mechanism.)

Apple Silicon: 16KB Page

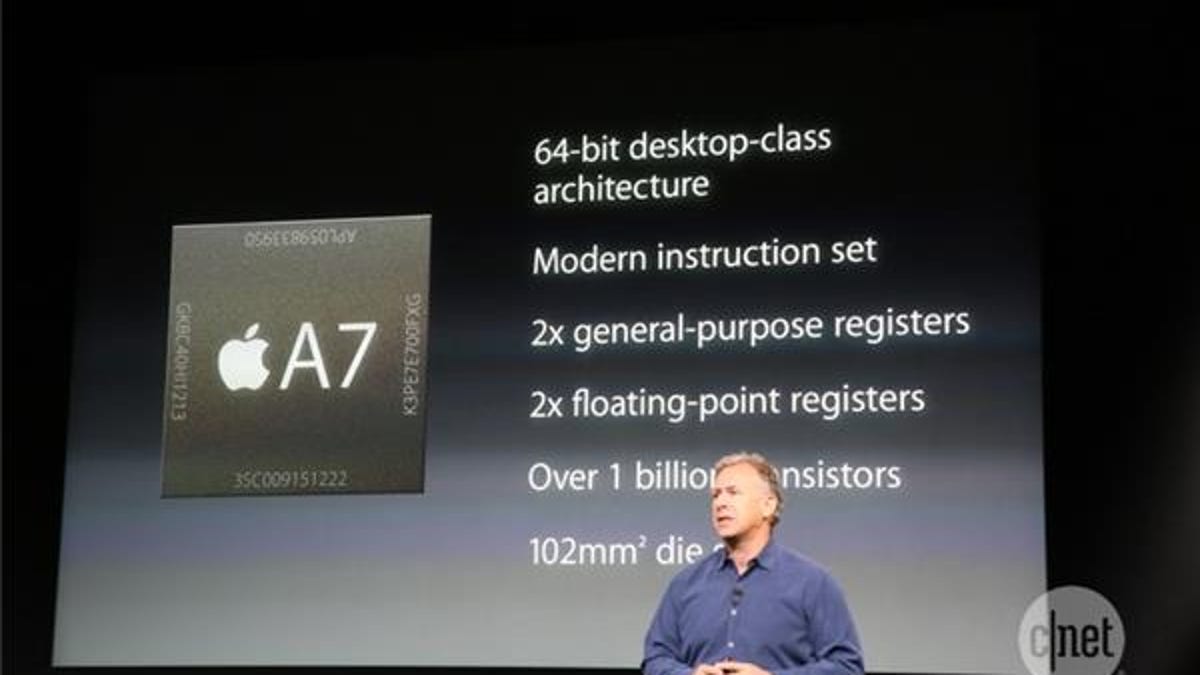

In 2013, Apple introduced the A7 chip (the first 64-bit ARM processor used in iPhones), which first used 16KB pages for iOS (and later on M-series chip for Mac). Because Apple controls the hardware (Apple Silicon), operating system (iOS and Mac OS X), it could enforce a single page size across all devices.

Larger pages reduce the number of entries needed in the page table and TLB. This increases the effective coverage of each TLB entry. From 4KB to 16KB, your TLB cover four times the memory, which means far fewer TLB misses and fewer slow, multi-level page table walks. Remember that PTE also occupies SRAM cache, so larger pages might also give us fewer cache misses.

Computer scientist Daniel Lemire compared memory performance between high-end Intel Skylake processors and Apple’s A12 mobile chip (iPhone XR’s CPU in 2018, also in iPad and iPad Air). The results were surprising. The A12 has better memory scalability compared to the Intel chip, and it achieved this while consuming far less power. Intel’s processors were more power-hungry but didn’t necessarily deliver better performance in memory-intensive workloads.

If you want to understand why larger page size improves performance, review a concept you might have learned in computer architecture class: Virtually Indexed, Physically Tagged (VIPT).

Modern CPU tries to look up the L1 cache at the same time as the TLB lookup. It can’t wait for the physical address. The CPU uses bits from the virtual address to find the “cache index” (the row) in L1 Cache, which is the VI part. Once it finds the row, it reads the “tag” and compares it to the physical address it gets from the TLB. This is the PT part, which makes sure that the cache hit accesses the correct memory. But this creates the synonyms problem.

With 4KB (\(2^{12}\)) pages, you have 12 bits of “safe” offset bits to use for your cache index. With 16KB (\(2^{14}\)) pages, you now have 14 bits. Those two extra bits (\(2^2 = 4\)) mean chip designers like Apple can build an L1 Data Cache that is 4x larger (or has 4x as many sets) without worrying about the synonym problem. This is one of the reason why Apple’s A-series and M-series chips have great memory performance.

Fragmentation

In the lecture, we mentioned that larger pages could lead to internal fragmentation. If a process uses only a small part of a 16KB page, is the rest wasted?

This is less of a concern with modern memory allocators. Android’s ART runtime and libc’s system malloc do not allocate a full 16KB page for every tiny object. Instead, they request large memory regions (called arenas or slabs) from the OS and then sub-divide those regions internally. This allows many small objects to share the same page.

This means the wasted space per page is usually minimal. While internal fragmentation technically increases, the significant performance gains from reduced TLB misses and better cache locality almost always outweigh this cost.

Hybrid pages?

Can we have a hybrid solution? As the textbook says:

Whenever you have two reasonable but different approaches to something in life, you should always examine the combination of the two to see if you can obtain the best of both worlds. We call such a combination a hybrid.

What if an OS used both 4KB pages (for small, fine-grained allocations) and 16KB or larger pages (for big, contiguous regions)?

Can we get the “best of both worlds”? Linux has tried this. Linux runs background daemon (khugepaged) to constantly scan memory. The daemon tries to find 4KB pages that can be compacted into 2MB pages. This features is called Transparent Huge Page (THP). However, in practice, this hybrid approach adds significant complexity. The benefits can be limited while the engineering cost is high. In chapter 5 of Mark Maniatis’s dissertation, the author looks at physical memory fragmentation on big HPC servers. For many server workloads, THP doesn’t provide enough benefit to justify the complexity. Simplicity is often preferred.

Recall from Worksheet 6 that modern CPUs have both fast performance cores (P-cores) and energy-efficient efficiency cores (E-cores). This Apple Silicon analysis shows that across the M1 to M4 families, the P-core L1 ITLB has 192 entries while the E-core has 128 entries.

Because these cores have different TLB and cache structures, migrating a process between them is not trivial. The operating system must flush the TLB when it migrates a process or perform context switch to clear out old, invalid translations.

Managing a hybrid page system (e.g., 4KB + 16KB pages) would be overly complicated. Apple chose simplicity.

Android in the age of abundance

By 2025, the situation is the opposite of 2008. Phones now have 8 GB or more of RAM, large caches, and multi-core processors. Hardware constraints have shifted from scarcity to efficiency: how to make the system faster and more power-efficient.

Android 15 introduces support for 16KB pages. Google reports the benefits:

- 3.16% lower app start time.

- 4.56% reduced power draw during app launch.

- Faster camera launch.

- Faster system boot time.

The improvements a result of fewer TLB misses, better cache utilization, and fewer page table lookups. The slight increase in memory usage is ok.

However, using a larger page size doesn’t mean we can suddenly waste memory. What’s different is that today’s hardware can support apps and games that use gigabytes of memory and depend on heavy GPU acceleration (史詩電影等級的華麗手遊,不再只是電競PC的專利). Graphics-fancy games push the limits of address translation speed, so improving that part of the system becomes a key optimization.

16KB Page’s Page Table Structure

Recall that in the lecture we’ve introduced x86-64’s 4-level page table, which can map a 48-bit virtual address space (256TB!! Who could use so much memory??) Let’s compare that with ARMv8’s 16KB 3-level page table.

| Level | Name | Index Bits | Entries | Maps |

|---|---|---|---|---|

| L4 | PML4 | 9 | 512 | Points to PDPT |

| L3 | PDPT | 9 | 512 | Points to PD |

| L2 | PD | 9 | 512 | Points to PT |

| L1 | PT | 9 | 512 | Physical pages |

| Offset | — | 12 bits | — | — |

Each entry is 8 bytes, so every page table fits exactly in one 4 KB page (512 × 8B = 4096B).

Android 15 adopts 16 KB pages on ARM processors. The page table only has three levels.

| Level | Name | Index Bits | Entries | Maps |

|---|---|---|---|---|

| L2 | Level 2 Table | 9 | 512 | Points to Level 1 |

| L1 | Level 1 Table | 9 | 512 | Points to Level 0 |

| L0 | Level 0 Table | 9 | 512 | Physical pages |

| Offset | — | 14 bits | — | — |

Total = 9 × 3 + 14 = 41 bits (support 2 TB virtual address space, enough for mobile devices). Each table entry maps 8 MB instead of 2 MB (512 entries × 16 KB).

| Property | x86-64 (4 KB) | ARM64 (16 KB) |

|---|---|---|

| Offset bits | 12 | 14 |

| Levels | 4 | 3 |

| Entries per table | 512 | 512 |

| One table covers | 2 MB | 8 MB |

| Typical VA space | 48 bits (256 TB) | 41 bits (2 TB) |

| Page-table walk cost | Higher | Lower |

| Page-table memory use | Larger | Smaller |

Now, I hope I have fully convinced you why a bigger page improves MMU’s address translation efficiency so much.

Lessons for You

For decades, 4KB pages were a safe and universal choice. As memory grows and the impact of cache miss or TLB miss become relatively larger, that assumption is shaking. Apple’s ecosystem has already shown the benefit; Android is beginning to follow as its hardware ecosystem matures.

When you design systems, remember that constants like “page size” are not sacred. They are engineering choices made under past constraints. As those constraints change (larger DRAM, deeper caches, more complex memory hierarchies), our design choices must change as well.Review Questions