Event-Driven I/O

Multi-threading is useful for two main purposes: parallelizing CPU-intensive tasks and concurrently handling I/O-bound workloads. In this lab, we will focus on the latter, where threads spend most of their time waiting for I/O operations to complete. How can we serve a large number of I/O-bound tasks using the least amount of system resources?

Use Event-Driven I/O!

A classic example I mentioned is PTT, which ran on 杜奕瑾’s dormitory PC back in the 1990s. Amazingly, it was able to support over 100,000 users. How was that possible?

Each user’s browser maintains a connection to the server, but most of the time that connection just waits for a click, or some other user action. If we were to assign one thread per user session, we would quickly run out of memory and CPU resources because of the overhead of managing thousands of threads.

Event-driven I/O multiplexes many connections onto a small number of threads. When a particular connection becomes active, such as when data arrives or a user clicks something, the OS notifies the server to handle that event. The server processes it briefly and then goes back to waiting for the next event.

Let’s go!

Set up

Open you have opened in Github Codespace, install the necessary packages in the terminal:

pip3 install streamlit waitress aiohttp趴1: Running a Fun Event-IO Web Server

Let’s run a fun Streamlit app. Streamlit is backed by an event-driven IO framework called Tornado. Let’s observe how Tornado uses We use Github Codespace will open a pop-up menu in the bottom right and provide a specific forwarded URL. Click that! Go back to your terminal and stop the server by pressing Now, open the log file you got: Search the log for What is the file descriptor (fd) number for your server’s main listening socket? (In the example above, it’s Now that you know the server’s main When you clicked the button, did you see the balloons flying up? The epoll to handle network connections and events.1. Clear Python Cache

find ~/.local/ | grep -E "(/__pycache__$|\.pyc$|\.pyo$)" | xargs rm -rf2. Run the Server

strace to see what system calls are made inside streamlit.strace -f -o log_streamlit.txt \

-e trace=epoll_wait,epoll_ctl,openat,listen,accept,bind \

streamlit run streamlit_fun.py --server.address=0.0.0.0 --server.port=80793. In Your Browser

4. Clean Up

Ctrl+C.Analyze

strace’s outputlog_streamlit.txt. Use ctrl-F or cmd-F to search for string in the file.bind. You’ll find a line showing the server binding to port 8079. It will look something like this: 11280 bind(6, {sa_family=AF_INET, sin_port=htons(8079), ...}, 16) = 06)fd, search for the epoll_ctl call that adds it to the list of things to monitor. Can you find the line that looks like epoll_ctl(..., EPOLL_CTL_ADD, <your_fd_from_step_1>, ...)? The server tells the OS: “Please start monitoring this socket (<your_fd_from_step_1>) for new connections.”epoll_wait woke up when you clicked the button and the server ran the code in balloons.py that pop up the balloons. Search the log for the “balloons.py” string. You should see a openat system call.

趴2: Web server benchmark

Now, let’s compare different approaches to handling concurrent HTTP requests. We’ll measure the throughput and the response time distribution.

We will be using the hey benchmark tool to simulate users. All our servers will simulate a 20ms (0.02s) I/O wait (like a disk or database query). Download hey first:

wget https://hey-release.s3.us-east-2.amazonaws.com/hey_linux_amd64 -O hey && chmod +x ./heyWe will run two benchmarks:

- Low Concurrency:

./hey -n 200 -c 4 http://localhost:PORT(4 users at once) - High Concurrency:

./hey -n 200 -c 16 http://localhost:PORT(16 users at once)

Server A: Baseline (Single-Thread, Blocking)

This server can only handle one request at a time, sequentially.

Run the server in one terminal window: python3 server.py (it will listen on port 8080). Open a new terminal window in VS Code by clicking the “+” sign. Run hey:

./hey -n 200 -c 4 http://localhost:8080

./hey -n 200 -c 16 http://localhost:8080Look at the latency distribution. Where is the peak? Notice how the latency explodes when you run hey with high concurrency.

Server B: Thread Pool

We use the waitress to implement a thread pool with 8 worker threads. Thread pool means I create the threads beforehand, so when connection arrives, the main thread can directly assign one worker thread to handle the connection without waiting for a new thread to be created.

Run the server in one terminal window: python3 server_threadpool.py (it will listen on port 8081). Open a new terminal window in VS Code by clicking the “+” sign. Run hey:

./hey -n 200 -c 4 http://localhost:8081

./hey -n 200 -c 16 http://localhost:8081How does the latency distribution change when you go from 4 concurrent users to 16?

Server C: AsyncIO (Single-Thread, Non-Blocking)

Run the server in one terminal window: python3 server_asyncio.py (it will listen on port 8082) Open a new terminal window in VS Code by clicking the “+” sign. Run hey:

./hey -n 200 -c 4 http://localhost:8082

./hey -n 200 -c 16 http://localhost:8082Does the latency distribution change when you go from 4 to 16 concurrent users?

趴3:Analysis

Let’s analyze your benchmark data. You should have something that looks like this:

| Server Model | Concurrency (C) | Median Latency (Your Data) |

|---|---|---|

| A: Baseline | 4 | ~0.08s |

| A: Baseline | 16 | Very High (e.g., > 0.4s) |

| B: Thread Pool | 4 | ~0.02s |

| B: Thread Pool | 16 | ~0.04s |

| C: AsyncIO | 4 | ~0.02s |

| C: AsyncIO | 16 | ~0.02s |

Food for Thoughts

Remember that all our servers add a 20ms (0.02s) sleep to each request handling. Try to reason about the number you see from hey. It can vary due to the number of CPU cores available as well.

- Server A (Baseline): Why did the latency for the

c=4run make sense (Hint: 4 * 0.02s)? Why did thec=16latency get so much worse, far more than just 16 * 0.02s? (Hint: “Check M/M/1 waiting behavior here”) - Server B (Thread Pool): Why was the latency for

c=4so good (~0.02s)? (Hint: How many threads did we have?) - Server B (Thread Pool): Why did the median latency for Server B double to ~0.04s when concurrency hit 16? (Hint: We had 16 concurrent requests, but only 8 worker threads. What happens to requests 9-16?) (Remember we talked about Queue Depth (QD) in SSD? If you increase QD more than *the number of worker inside SSD, your bandwidth will saturate and the queuing delay will grow.)

- Server C (AsyncIO): Why did the median latency for the single-threaded

asyncioserver stay low at ~0.02s, even with 16 concurrent requests? - Suppose you want to run your own Line Bot web server in your dormitory PC. Suppose you expect the server would handle at most 1000 chat connections, which model (Thread Pool or AsyncIO) would you choose, and why?

Bonus

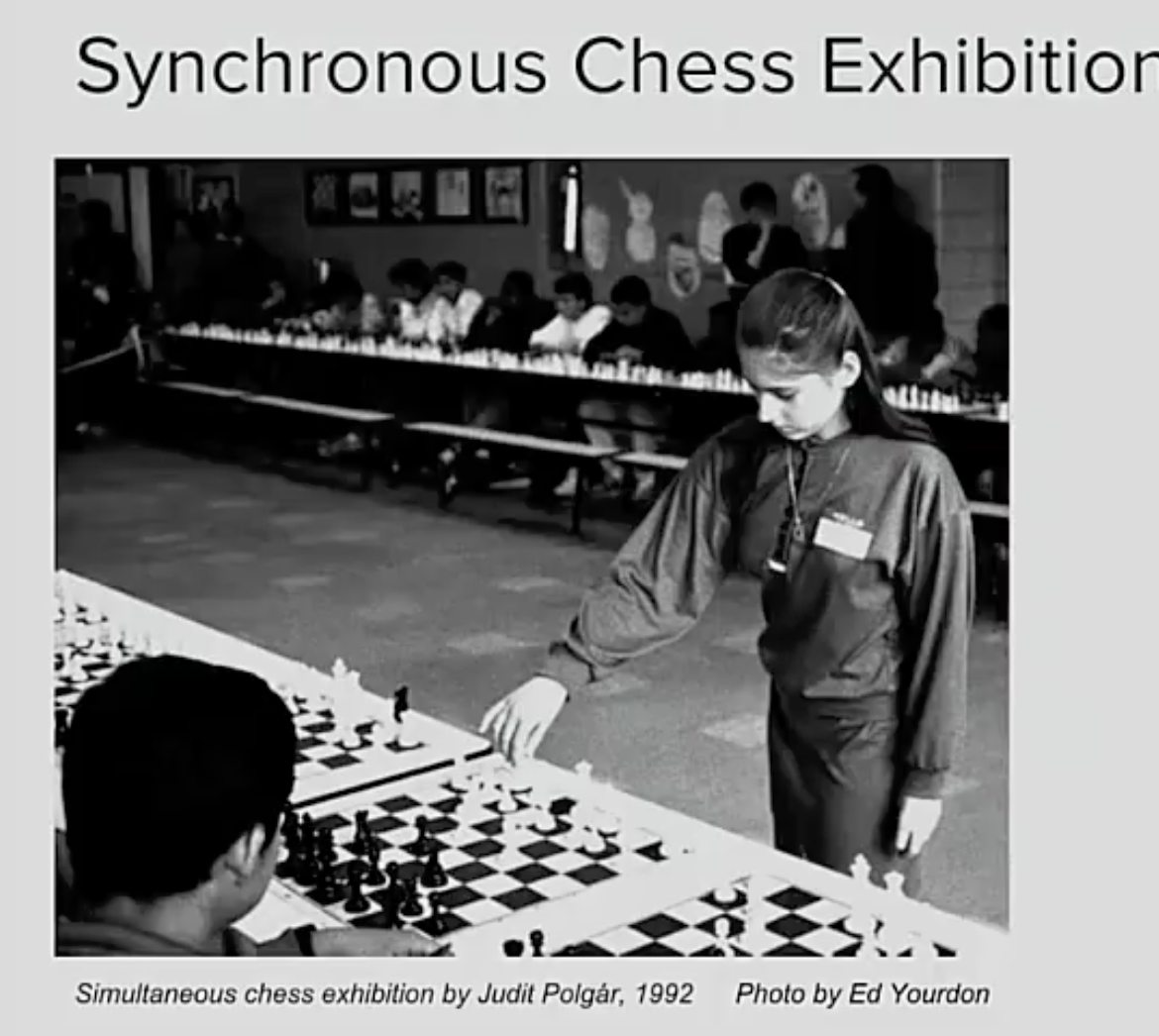

A wonderful explanation of synchronous v.s asynchronous I/O using the story of chess master Judit Polgár: